Method

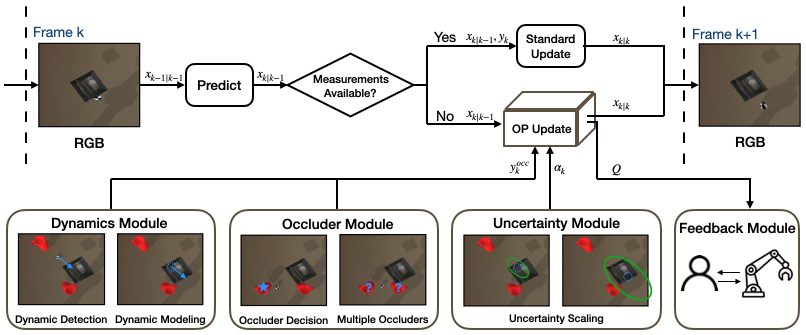

Object Permanence Filter

Following the predict-update cycle, the OPF introduces an OP update when no measurements are available for the object being tracked. The OP update consists of i) a dynamics module that detects and models the dynamics of the object, ii) an occluder module that determines which object is the occluder and handles multiple occluders, and iii) an uncertainty module that updates the covariance matrix. The feedback module monitors the uncertainty of the updates by tracking the spectral norm of the update covariance matrices. It can be used to change the behavior of the robot or to alert the human operator when the uncertainty exceeds a safety threshold \(\epsilon_{\text{safe}}\).
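The feedback check described above can be illustrated with a minimal sketch. It assumes the update covariance is available as a square NumPy array. The function names, the example covariance values, and the threshold value are hypothetical and are not taken from the OPF implementation.

```python
import numpy as np


def spectral_norm(cov):
    """Return the spectral norm (largest singular value) of a matrix."""
    return float(np.linalg.norm(cov, ord=2))


def is_unsafe(cov, eps_safe):
    """Return True when the spectral norm of cov exceeds eps_safe."""
    return spectral_norm(cov) > eps_safe


if __name__ == "__main__":
    # Hypothetical 6x6 pose covariance (3 translation + 3 rotation terms).
    cov = np.diag([0.01, 0.01, 0.01, 0.02, 0.02, 0.02])
    eps_safe = 0.1  # hypothetical safety threshold

    # Illustrative only: inflate the covariance on each frame without a
    # measurement and report when the threshold is crossed.
    for k in range(6):
        flag = "UNSAFE" if is_unsafe(cov, eps_safe) else "ok"
        print(f"frame {k}: spectral norm = {spectral_norm(cov):.3f} ({flag})")
        cov = cov * 1.5
```

For a symmetric positive semi-definite covariance matrix, the spectral norm equals its largest eigenvalue, so the check bounds the variance along the most uncertain direction of the state.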

Results

Comparative Results

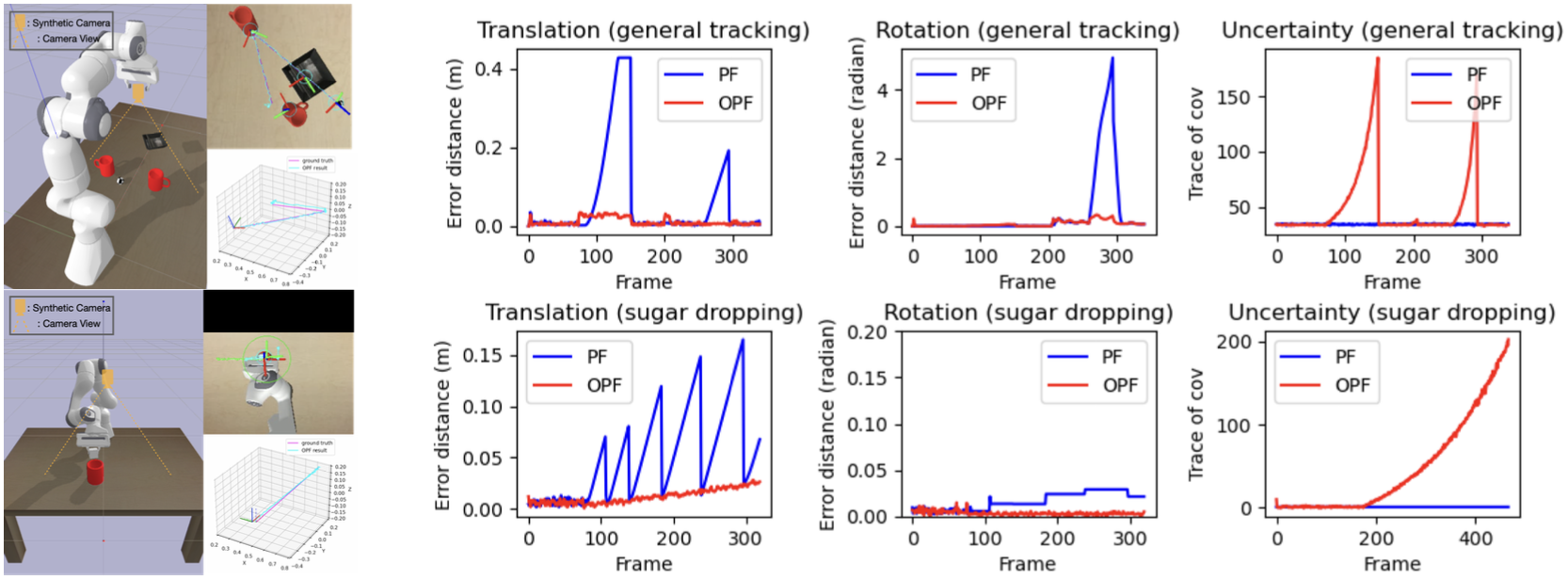

(top row) General object permanence (OP) tracking experiment; (bottom row) sugar-dropping experiment (inspired by MaskUKF). The x-axis of every plot denotes the \(k\)-th camera frame. (1st column) Simulation snapshots; (2nd-3rd columns) translation and rotation tracking errors of the occluded object given by \(\textcolor{blue}{\text{PF (blue)}}\) and \(\textcolor{red}{\text{OPF (red)}}\); (4th column) traces of \(Q\) for the tracked object.

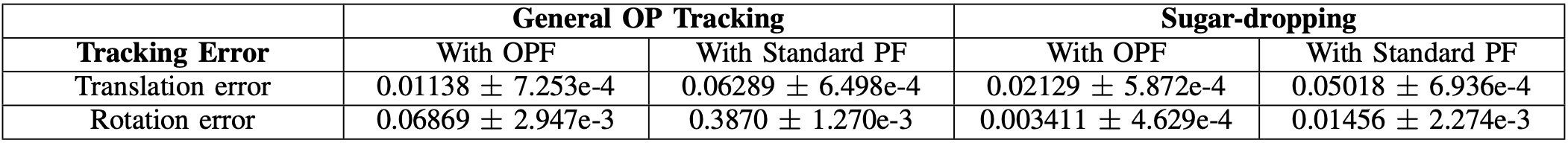

Numerical results for general OP tracking (experiment 1) and sugar-dropping (experiment 2), over 5 runs.

Conclusion and Future Work

In this work, we propose a set of assumptions and rules to computationally embed object permanence into the particle filter, forming the object permanence filter (OPF). The OPF is an extended 6-DoF filter that provides plausible tracking under heavy and prolonged occlusion in interactive tasks. We show that the OPF works well in simulation and hardware experiments and that, because it is agnostic to the underlying tracker, it can be easily applied to multiple existing 6-DoF trackers while achieving real-time performance. In the future, we plan to adopt physics-based object and human dynamics. Even just treating the objects as freely moving 3D point masses and the human as a constrained 3D point mass would begin to diversify our modeling abilities. Finally, some parameters such as \(\delta\) and \(\epsilon_{\text{occ}}\) could be made dependent on the actual 3D shape of the object, yielding more generalizable thresholds for motion and occlusion.